The Core Philosophy: The Lakehouse Mindset

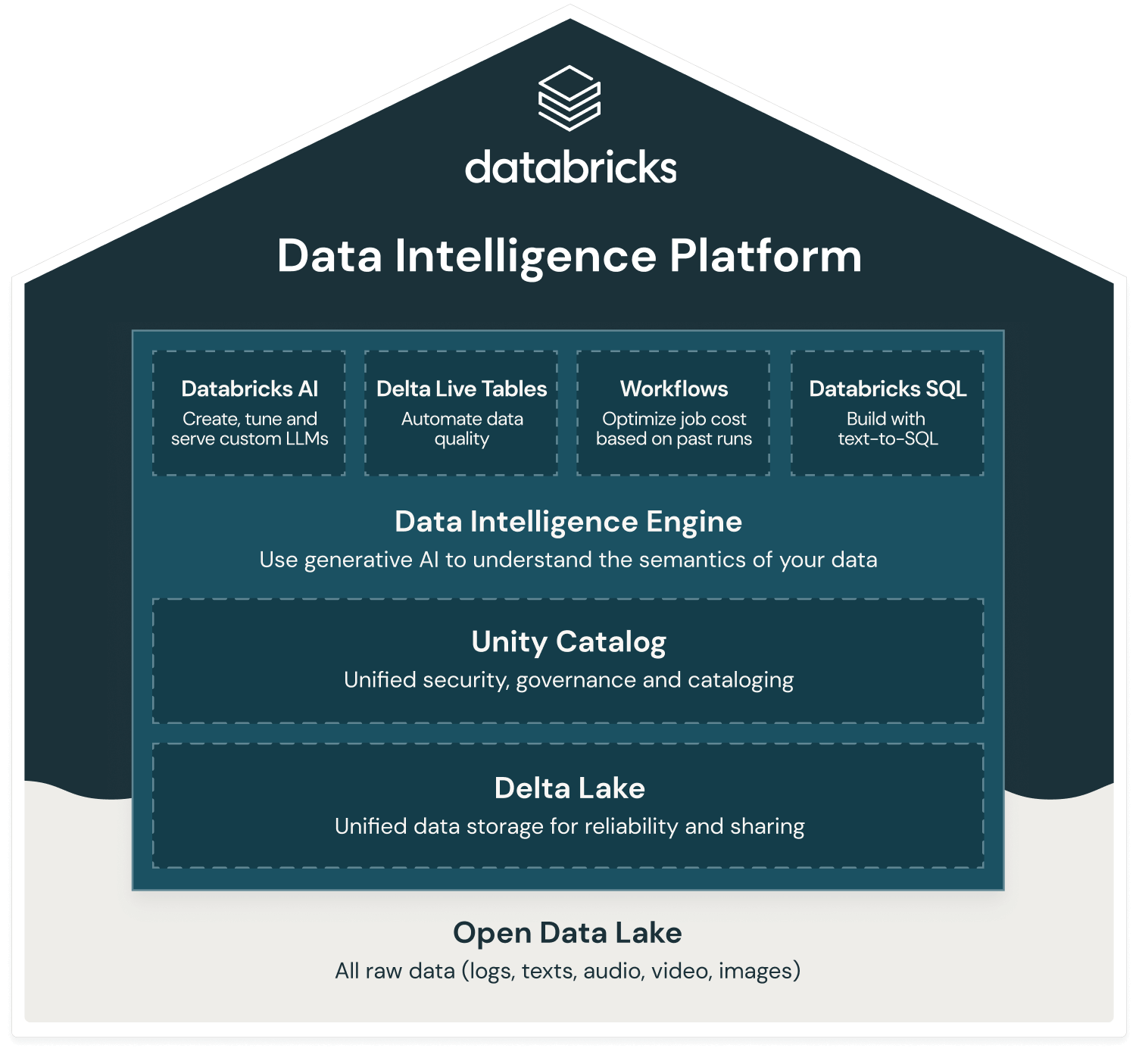

Every exam question is now rooted in the Databricks Data Intelligence Platform philosophy. Understanding its four pillars is the first step to success. These principles work together to create a unified, reliable, and open foundation for all Data and AI workloads.

All data, structured and unstructured, in one place.

Built on open standards like Delta Lake & Apache Spark.

Reliability on the data lake with Delta Lake's features.

Unity Catalog as the unified foundation for security, discovery, and AI.

Decoding the Exams: Associate vs. Professional

While the Associate exam focuses on the "how" of daily tasks, the Professional exam tests the "why" of designing production-grade solutions. The charts below visualize the different skill depths required for each certification.

Data Engineer Associate Focus

Focuses on core implementation skills and foundational concepts.

Data Engineer Professional Focus

Emphasizes advanced design, optimization, and governance.

The Modern Databricks Stack

Mastering the modern stack means understanding how data flows through the Medallion Architecture. This standardized approach ensures data quality and usability, moving from raw ingestion to business-ready insights.

Visualizing the Medallion Architecture

Bronze Layer

Raw, unfiltered data ingested from various sources.

Silver Layer

Cleaned, validated, and enriched data ready for analysis.

Gold Layer

Aggregated data for business intelligence and reporting.
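
To make the three layers concrete, here is a minimal sketch in plain Python. It is only an illustration: on Databricks each layer would typically be a Delta table built with Spark or a declarative pipeline, not an in-memory list. The sample records, field names, and the functions `to_bronze`, `to_silver`, and `to_gold` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical raw order events, standing in for files landed by ingestion.
RAW_ORDERS = [
    {"order_id": "1", "region": "emea", "amount": "120.50"},
    {"order_id": "2", "region": "AMER", "amount": "80"},
    {"order_id": "2", "region": "AMER", "amount": "80"},            # duplicate
    {"order_id": "3", "region": "emea", "amount": "not-a-number"},  # bad amount
    {"order_id": None, "region": "apac", "amount": "15"},           # missing key
]


def to_bronze(raw_rows):
    """Bronze: keep every record as received, with no filtering."""
    return [dict(row) for row in raw_rows]


def to_silver(bronze_rows):
    """Silver: validate, cast types, normalize values, and deduplicate."""
    seen_ids = set()
    silver = []
    for row in bronze_rows:
        order_id = row.get("order_id")
        if order_id is None or order_id in seen_ids:
            continue  # drop rows without a key and repeated keys
        try:
            amount = float(row["amount"])
        except (TypeError, ValueError):
            continue  # drop rows whose amount cannot be parsed
        seen_ids.add(order_id)
        silver.append(
            {
                "order_id": int(order_id),
                "region": row["region"].upper(),
                "amount": amount,
            }
        )
    return silver


def to_gold(silver_rows):
    """Gold: aggregate cleaned rows into a reporting-friendly summary."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


bronze = to_bronze(RAW_ORDERS)
silver = to_silver(bronze)
gold = to_gold(silver)
print(len(bronze), len(silver), gold)
```

Note that Bronze keeps all five records, including the bad ones, so Silver can be rebuilt later if the validation rules change. That replayability is one common argument for keeping raw data unfiltered.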

The Big Shift: Exam Updates from Sept 30, 2025

The Databricks certification exams have evolved. The new focus is squarely on the modern, managed ecosystem defined by Unity Catalog and Lakeflow Declarative Pipelines (formerly DLT). The chart below quantifies this shift in emphasis across key topic areas.

Change in Exam Topic Emphasis

Your Path to Success: Final Pro-Tips

Beyond knowledge, a strategic approach to preparation is key. These three pillars will help you get ready for the challenges of the exams.

Hands-On Practice

Theory is not enough. Practice building pipelines with Lakeflow Declarative Pipelines (formerly DLT), orchestrating with Lakeflow Jobs, and deploying with Databricks Asset Bundles (DABs).

Read Official Docs

The official documentation is the source of truth for the exams. It is generally more current and accurate than third-party resources.

Think in Trade-offs

Especially for the Professional exam, understand the 'why' behind technical decisions. Know the pros and cons of each approach.